Effective Semantics Intro

The Context

Automated reasoning has experienced a revolution over the past 100 years.

In stages, roughly:

- Electronic computers (“computers”, “phones”, etc) replaced mechanical calculating tools.

- Stanzas of type theories (“programs”) replaced many clerical jobs.

- Probability distributions over manifolds (“deep neural networks”) are replacing many “creative” jobs.

What is “Effective Semantics”?

Effective semantics is an attempt to unify the “program” approach with the “neural network” approach, to allow for creative generation of effective type theories in which an automated reasoning agent can propose ideas and evaluate their appropriateness. This is an attempt to generalize and formalize the scientific process to a degree in which it can be executed by computers.

The Big Idea

Effective semantics is based on several existing bodies of research. The main innovation here is the particular path from effective theories to images which represent the theory. I believe this path is bi-directional and this approach will allow for extracting semantic meaning from tensor images.

The path is roughly:

- Formalize the language of your theory into some formal semantics;

- Translate those into the language of homotopy type theory;

- Convert that into cubical type theory, as diagrams;

- Which can be represented as cubical sheaves, which are decorated hypergraphs, which are adjacency tenseors + data;

- The data of an adjacency tensor can also be interpreted as a digital image.

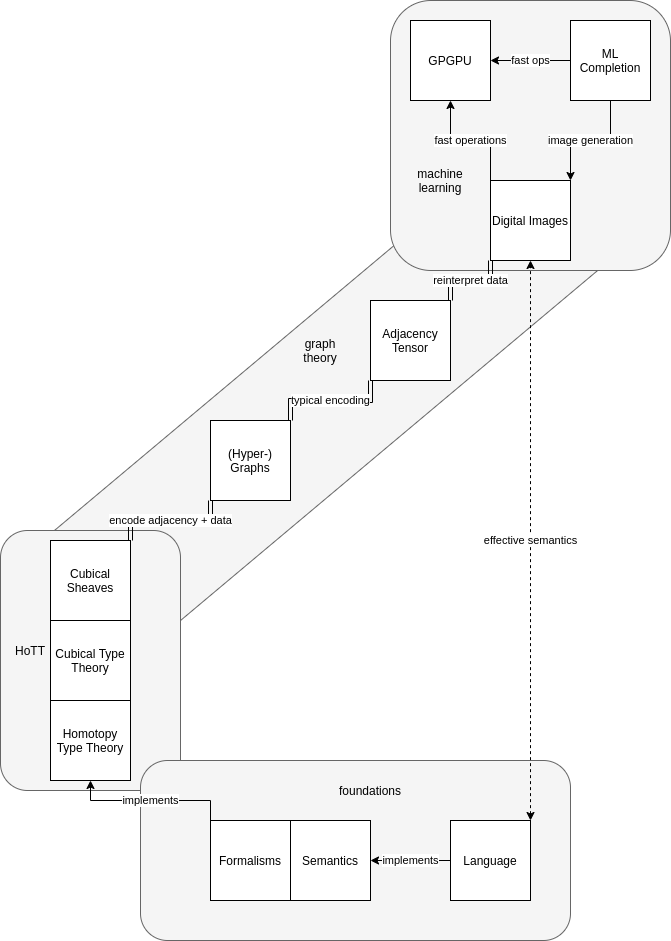

Schematically:

There are three areas of mathematics involved here:

- foundations and homotopy type theory (HoTT));

- graph theory and graph representations as tensors; and,

- applied mathematics used by machine learning.

Foundations cover both defining your domain language in formal terms (formalisms), and the semantics of those formal terms as mathematical objects amenable to calculation (HoTT).

Graph theory is used to convert an implementation of homotopy type theory, cubical type theory into digital images.

These digital images can then be used for two benefits:

- they can be quickly operated on using GPUs, allowing fast categorical diagram chasing; and,

- they can be “completed” using image learning.

This allows for “guessing” the proof completion and then rapidly validating it.

Research Plan

My background is in industry, so my research approach is based on a sequence of applied projects which advance the underlying technology.

Implement Fast Cubical Type Theory

Implement the basic techniques of digital image processing and sheaf encoding.

Outlined as projects:

- Write hypergraph as tensor libraries for GPGPU. [In progress.]

- Write CuTT library for GPGPU based on the above. [In progress.]

- Implement HoTT library for GPGPU based on CuTT library.

- If possible, connect to existing proofs engines.

- If not, transcode Brunerie’s proofs.

- Capstone: Validate that Brunerie’s number is 2.

- Stretch: Encode the objects requested by Scholze.

Implement Image-to-Theory Extraction

This is the inverse of the previous section – connecting digital images to effective theories describing their structure.

This consists of two main phases:

- Implementing the topology features needed.

- Building a theory to describe a set of features.

Outlined at projects:

- Implement covering based topological data analysis, similar to Michael Robinson – but using cubical rather than simplical constructs. This should use the topological structures from above.

- Implement hypercube covering based ML using above hypergraph library. This looks like creating a covering that “minimizes” complexity for some level of predictive power.

- Capstone: Topological AI go bot.

- Stretch: Topological analysis of code trace images.

Long Term Goals

There are a few projects that serve as directional goals for the research.

AI Guided Proof Completion

Use machine learning techniques to suggest completions of mathematical proofs.

My goal is to enable suggestions of completions to cubical type theory proofs via machine learning on the digital image encodings of the partial proof and suggestions of statements in CuTT. One complication here is suggesting valid statements in CuTT, from the digital image. However, the tree pruning based on rules used by DNNs like AlphaZero suggests that this behavior can be “learned”, at least to some level of complexity.

This is an open research question, and at the heart of effective semantics: the AI is attempting to suggest semantically valid completions to partial mathematical statements, with the hope that these generate interesting semantic structures.

Synthetic Model Reasoning

Use synthetic theories of a domain, eg finance, to model system behavior and validate software conforms to “finance rules”.

This is an application of the fast validation engines, but the main work is embedding models, one of finance and one of software, into a metatheory that allows you to reason about them jointly.

Metacognitive AI

This one is more nebulous, but is the long term research agenda: teaching an AI to reason about its own “state of mind”.

The current strategy is to create an AI which can combine two ideas –

- a task specific effective theory AI, eg a go playing AI; and,

- a mathematical AI capable of reasoning about effective theories.

My hope is that recent innovations such as parametric cubical type theory introduce constructs that allow representing an AI’s internal semantics within a type theory that’s easy to encode into GPU structures interpretable as digital images. This will have to leverage the work around synethic analysis and topology to embed the manifolds/coverings learned by the AI as semantic structures to be reasoned about.

Z. Michael Gehlke

Z. Michael Gehlke